Additive model

In statistics, an additive model (AM) is a nonparametric regression method. It was suggested by Jerome H. Friedman and Werner Stuetzle (1981) and is an essential part of the ACE (alternating conditional expectations) algorithm of Breiman and Friedman (1985). The AM uses a one-dimensional smoother to build a restricted class of nonparametric regression models. Because of this, it is less affected by the curse of dimensionality than, for example, a p-dimensional smoother that smooths over all p predictors jointly. Furthermore, the AM is more flexible than a standard linear model, while being more interpretable than a general regression surface. The price is approximation error, because a sum of one-dimensional functions cannot represent interactions between predictors. Problems with the AM include model selection, overfitting, and multicollinearity.

Description

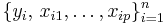

Given a data set  of n statistical units, where

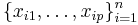

of n statistical units, where  represent predictors and

represent predictors and  is the outcome, the additive model takes the form

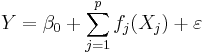

is the outcome, the additive model takes the form

or

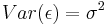

Where ![E[ \epsilon ] = 0](/2012-wikipedia_en_all_nopic_01_2012/I/c3795306a7237b6f276e2d73c77978fc.png) ,

,  and

and ![E[ f_j(X_{j}) ] = 0](/2012-wikipedia_en_all_nopic_01_2012/I/782cdbff173fffc28486e49c2481c14d.png) . The functions

. The functions  are unknown smooth functions fit from the data. Fitting the AM (i.e. the functions

are unknown smooth functions fit from the data. Fitting the AM (i.e. the functions  ) can be done using the backfitting algorithm proposed by Andreas Buja, Trevor Hastie and Robert Tibshirani (1989).

) can be done using the backfitting algorithm proposed by Andreas Buja, Trevor Hastie and Robert Tibshirani (1989).
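Backfitting updates one component at a time. It smooths the partial residual $r_j = y - \beta_0 - \sum_{k \ne j} f_k$ against $x_j$, then centers the result, and repeats until the components stop changing. The following is a minimal Python/NumPy sketch under stated assumptions. It uses a Gaussian-kernel (Nadaraya-Watson) smoother with a fixed bandwidth as the one-dimensional smoother. That smoother choice, the helper names `kernel_smooth` and `backfit`, and all parameter values are hypothetical illustrations, not the specific procedure of Buja, Hastie and Tibshirani, whose paper treats general linear smoothers.

```python
import numpy as np


def kernel_smooth(x, r, bandwidth):
    """Hypothetical 1-D smoother: Nadaraya-Watson estimate of E[r | x] at each observed x."""
    d = (x[:, None] - x[None, :]) / bandwidth
    w = np.exp(-0.5 * d ** 2)  # Gaussian kernel weights (assumed smoother choice)
    return (w @ r) / w.sum(axis=1)


def backfit(X, y, bandwidth=0.1, max_iter=100, tol=1e-6):
    """Sketch of backfitting for y = beta0 + sum_j f_j(x_j) + noise.

    Returns the intercept and an (n, p) array whose column j holds f_j at x_ij.
    """
    n, p = X.shape
    beta0 = y.mean()  # intercept estimate; the f_j are kept centered
    f = np.zeros((n, p))
    for _ in range(max_iter):
        f_old = f.copy()
        for j in range(p):
            # Partial residual: remove the intercept and every component except f_j.
            r = y - beta0 - f.sum(axis=1) + f[:, j]
            fj = kernel_smooth(X[:, j], r, bandwidth)
            # Center: sample analogue of the constraint E[f_j(X_j)] = 0.
            f[:, j] = fj - fj.mean()
        if np.max(np.abs(f - f_old)) < tol:  # stop once the components settle
            break
    return beta0, f


# Usage on simulated data (assumed example, not from the source).
rng = np.random.default_rng(0)
n = 200
X = rng.uniform(-1.0, 1.0, size=(n, 2))
y = 2.0 + np.sin(np.pi * X[:, 0]) + X[:, 1] ** 2 + 0.1 * rng.normal(size=n)

beta0, f = backfit(X, y, bandwidth=0.1)
fitted = beta0 + f.sum(axis=1)
```

On this simulated data the columns of `f` should track $\sin(\pi x_1)$ and $x_2^2$ up to an additive constant. The update order follows Gauss-Seidel style: each new $f_j$ is used immediately when forming the next partial residual. Any other one-dimensional linear smoother, such as a smoothing spline, could replace `kernel_smooth` without changing the loop.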

See also

- Generalized additive model

- Backfitting algorithm

- Alternating conditional expectation model

- Projection pursuit regression

- Generalized additive model for location, scale, and shape (GAMLSS)

References

- Buja, A., Hastie, T., and Tibshirani, R. (1989). "Linear Smoothers and Additive Models", The Annals of Statistics 17(2):453–555.

- Breiman, L. and Friedman, J.H. (1985). "Estimating Optimal Transformations for Multiple Regression and Correlation", Journal of the American Statistical Association 80:580–598.

- Friedman, J.H. and Stuetzle, W. (1981). "Projection Pursuit Regression", Journal of the American Statistical Association 76:817–823.
